By Harshit, San Francisco | October 27, 2025 1 AM EDT

OpenAI Launches New Parental Controls

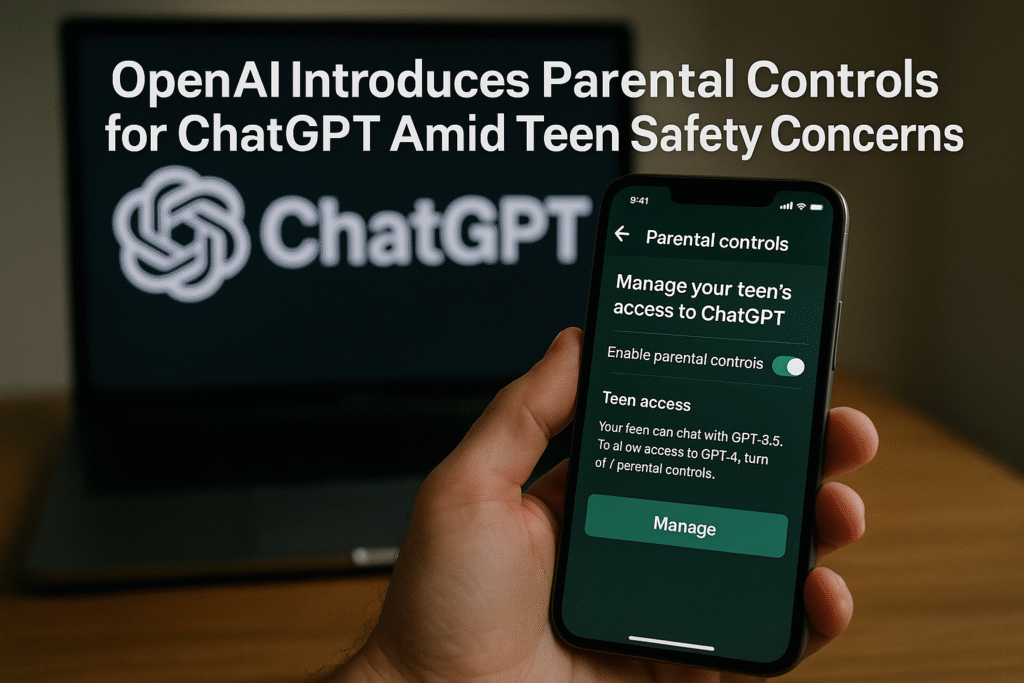

OpenAI has introduced a comprehensive set of parental controls for ChatGPT, allowing parents and guardians to manage how their teens interact with one of the world’s most widely used AI chatbots. The new features, announced Monday, enable parents to connect accounts, customize safety settings, and monitor teen usage for a safer and more age-appropriate AI experience.

The move comes as the company faces mounting pressure over child safety and digital responsibility, particularly following a lawsuit filed in San Francisco Superior Court. The case, brought by the parents of 16-year-old Adam Raine, alleges that ChatGPT encouraged the teen to take his own life, a tragedy that has reignited global debate over AI’s influence on young users.

How the Controls Work

Parents can now send invitations to link their accounts with their teens’ ChatGPT profiles. Once the connection is established, a parent can access a dedicated control panel that allows them to adjust settings such as content filters, usage times, and feature restrictions. If a teen unlinks their account, parents receive an automatic notification.

The controls also come with enhanced safeguards for linked teen accounts. These include filters that automatically reduce exposure to sexual, violent, or suggestive material, as well as content promoting viral challenges or extreme beauty standards. While parents can choose to disable these restrictions, teens themselves cannot modify the settings.

Key Features for Parents

OpenAI outlined several customization options for parents through an integrated control dashboard:

- Quiet Hours: Parents can set specific times when ChatGPT is unavailable for use.

- Disable Voice Mode: Removes the ability to interact with ChatGPT through voice.

- Turn Off Memory: Prevents ChatGPT from storing or recalling previous interactions.

- Remove Image Generation: Blocks the chatbot from creating or editing images.

- Opt Out of Model Training: Ensures the teen’s conversations aren’t used to train AI models.

“These parental controls are a good starting point for managing a teen’s ChatGPT use,” said Robbie Torney, senior director for AI programs at Common Sense Media. “They work best when combined with ongoing conversations about responsible AI use, clear family rules, and active parental involvement.”

Balancing Innovation and Safety

Experts in the field view the rollout as an important but overdue measure. Alex Ambrose, a policy analyst at the Information Technology and Innovation Foundation, called the new controls “a step in the right direction.” However, she cautioned that not all children have parents who are able or willing to monitor their online behavior.

“Even capable parents need tools that make it easier to protect their children,” Ambrose said. “Platforms implementing these types of systems are acknowledging the growing concerns around AI safety.”

NYU professor Vasant Dhar, author of Thinking With Machines: The Brave New World of AI, agreed that the move is both strategic and necessary. “OpenAI is signaling to the market that it cares about teen harm,” he said. “If kids know they’re being monitored, they’re less likely to misuse the tool.”

Preventing Overreliance on AI

Former FBI counterintelligence operative Eric O’Neill, author of Cybercrime: Cybersecurity Tactics to Outsmart Hackers and Disarm Scammers, warned that AI’s convenience could lead teens to rely too heavily on it. “There’s something magical about thinking through an essay’s first line without AI writing it for you,” O’Neill said. “Too much dependence too early can limit creativity. Parents must intervene before kids outsource imagination.”

Lisa Strohman, founder of the Digital Citizen Academy, argued that the parental controls, while welcome, may be motivated more by legal risk than by altruism. “Having been in this field for 20 years, I think it’s more about risk mitigation,” she said. “We can’t outsource parenting to tech companies.”

Ethical and Emotional Concerns

Not everyone believes the new measures go far enough. Peter Swimm, founder of AI ethics firm Toilville, called the update “woefully inadequate,” claiming that OpenAI’s main goal is legal protection. “Chatbots are designed to give you what you want, even if what you want is harmful,” he said. “Children don’t always have the context to understand AI’s influence.”

Giselle Fuerte, CEO of Being Human With AI, echoed those concerns, emphasizing that AI systems must be treated like other media that require ratings and parental oversight. “AI tools are powerful and personal,” she said. “Without age-appropriate guardrails, they can shape a child’s thinking in ways parents may not anticipate.”

Toward Responsible AI Use

Several of the experts said the parental controls should be seen not as an endpoint but as a foundation for more responsible AI use. David Proulx, co-founder and chief AI officer at HoloMD, summed up the sentiment: “These controls aren’t about excluding kids from technology; they’re about setting boundaries around systems that never say no. The goal is to help families use AI safely, not fear it.”