By Harshit

LAS VEGAS, JANUARY 6, 2026 —

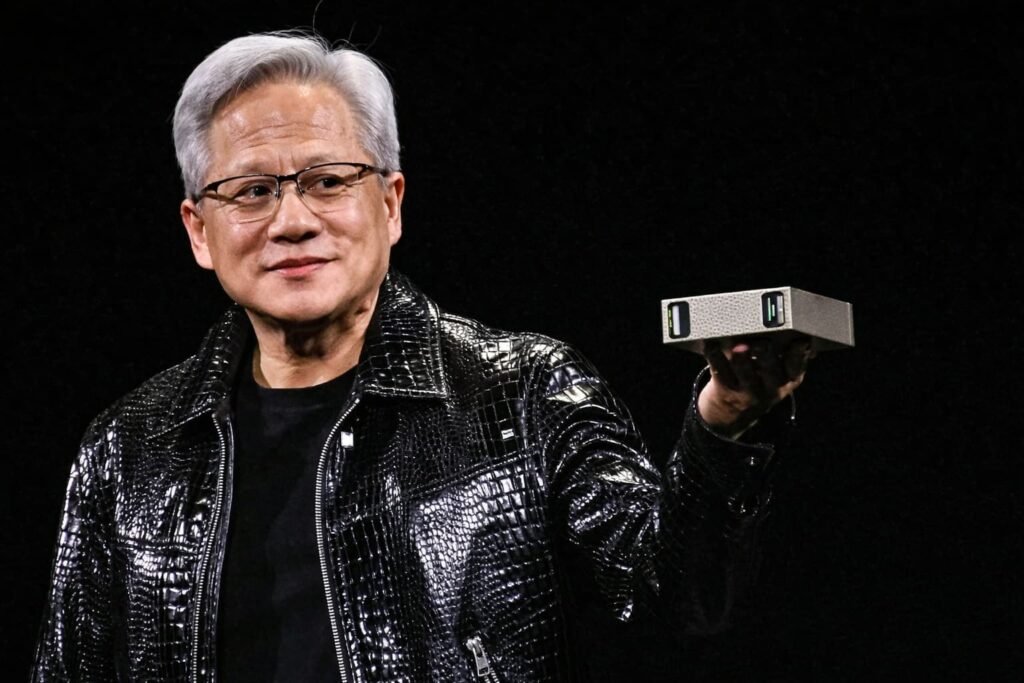

Nvidia on Monday revealed new details about its next-generation AI computing platform, Vera Rubin, with Chief Executive Jensen Huang saying the chips are now in full production and capable of delivering up to five times the artificial intelligence performance of the company’s previous generation when running chatbots and other AI applications.

Speaking at the Consumer Electronics Show (CES) in Las Vegas, Huang outlined how the Vera Rubin platform is designed to meet surging demand for large-scale AI inference, even as Nvidia faces growing competition from rivals and from major customers building their own custom chips.

A New Architecture for the Inference Era

The Vera Rubin platform consists of six interconnected Nvidia chips and will debut later this year in a flagship server configuration containing 72 graphics processing units (GPUs) and 36 central processing units (CPUs). According to Nvidia, these servers can be linked together into large “pods” comprising more than 1,000 Rubin GPUs.

Huang said the architecture is optimized for generating "tokens," the basic units of data that AI models process and produce, and could make token generation as much as ten times more efficient than previous designs.

“This is how we were able to deliver such a gigantic step up in performance, even though we only have 1.6 times the number of transistors,” Huang said, noting that the gains come from architectural changes rather than brute-force scaling.

A key part of that improvement comes from Nvidia’s use of a proprietary data format, which the company hopes will become an industry standard over time. The approach allows the Rubin platform to extract more performance from existing silicon, particularly for long-running conversational AI workloads.

Focus Shifts From Training to Serving AI

While Nvidia continues to dominate the market for training large AI models, the company now faces intensifying competition in AI inference, the process of running trained models to serve hundreds of millions of users.

Traditional rivals such as Advanced Micro Devices are pushing alternative accelerators, while cloud giants like Google are increasingly designing their own in-house AI chips to reduce dependence on Nvidia hardware.

Huang’s CES keynote reflected that shift. Much of the presentation focused on how Rubin chips handle real-world AI workloads, including long conversations and memory-intensive interactions.

To support these use cases, Nvidia introduced a new feature called context memory storage, a layer of storage technology designed to help AI systems respond more quickly during extended conversations without reprocessing massive amounts of data.

Networking and Data Center Competition

Nvidia also announced a new generation of networking switches featuring co-packaged optics, a technology that improves energy efficiency and bandwidth when connecting thousands of machines into a single AI cluster.

This move places Nvidia in more direct competition with networking specialists such as Broadcom and Cisco Systems, as data center operators increasingly view networking as a critical bottleneck for AI performance.

Early Customers and Cloud Adoption

Nvidia said CoreWeave will be among the first customers to deploy Vera Rubin systems. The company also expects major cloud providers — including Microsoft, Amazon, Oracle, and Google — to adopt the platform.

These partnerships underscore Nvidia’s strategy of remaining deeply embedded across the cloud ecosystem, even as some customers experiment with proprietary chips.

Open-Source Push in Autonomous Driving

Beyond data centers, Huang highlighted new software for autonomous vehicles, including broader availability of Alpamayo, a system designed to help self-driving cars explain and document their decision-making processes.

In a notable move, Nvidia plans to open-source both the models and the training data behind Alpamayo, allowing automakers to independently audit and evaluate performance.

“Only in that way can you truly trust how the models came to be,” Huang said.

Geopolitics and China Demand

The announcements come amid continued geopolitical scrutiny of AI hardware exports. Nvidia executives acknowledged strong demand in China for the company’s older H200 chips, which U.S. President Donald Trump recently allowed to be exported.

Chief Financial Officer Colette Kress said Nvidia has applied for export licenses to ship H200 chips to China and is awaiting approvals from U.S. and other governments.

Meanwhile, Nvidia continues to emphasize that newer platforms like Rubin significantly outperform previous generations, reinforcing the company’s technological lead even as older chips circulate globally.

Expanding Talent and Competitive Pressure

Last month, Nvidia also acquired talent and chip technology from startup Groq, including engineers who previously helped Google design its own AI processors. Huang said the acquisition would not disrupt Nvidia’s core business but could lead to new product lines in the future.

As competition intensifies, the Vera Rubin platform represents Nvidia's clearest signal yet that the company is preparing for an AI market defined increasingly not just by training models but by running them at global scale.