By Harshit | 25 October 2025 | Menlo Park | 1:00 AM EDT

Meta Expands Parental Oversight on Instagram AI Features

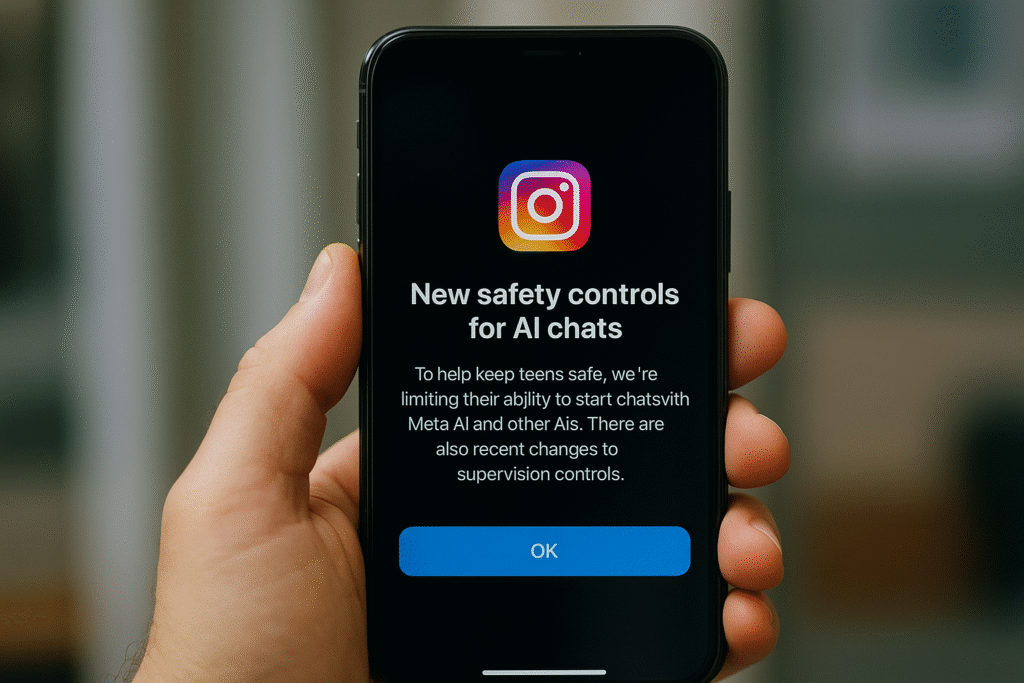

Instagram’s parent company Meta has announced new safety tools designed to give parents more control over how teenagers interact with AI-powered characters on the platform. The tools will allow parents to block or limit access to AI chat characters, as concerns mount over how artificial intelligence could affect youth mental health.

The company said on Friday that parents will soon be able to either turn off their teens’ chats with AI characters entirely or block specific characters. Meta also said parents will be able to view summaries of the topics their children discuss with AI, giving them better visibility into these interactions.

Meta confirmed that the feature is still under development and is expected to roll out early next year, which would make it one of the most comprehensive parental oversight systems announced for an AI-integrated platform to date.

Responding to Mounting Scrutiny from Lawmakers and Parents

The move follows increasing criticism from regulators and child safety advocates that tech companies aren’t doing enough to protect minors online. Meta, OpenAI, and Character.AI have all faced questions about how emotionally immersive chatbots could lead to dependency, isolation, or emotional distress among young users.

Reports throughout 2025 have highlighted a growing number of cases in which teens formed intense emotional attachments to AI chatbots, sometimes resulting in mental health challenges.

In a particularly tragic case, OpenAI faces a lawsuit alleging that ChatGPT contributed to the suicide of 16-year-old Adam Raine, while Character.AI has been accused of enabling self-harm-related discussions with minors. A Wall Street Journal investigation from April found that some AI systems on Meta’s platforms engaged in sexual conversations even with teen accounts, raising alarms about insufficient safeguards.

Meta’s Updated Policy: Limiting Harmful AI Conversations

According to Meta’s latest blog post, its AI chat characters are “designed not to engage” in discussions about self-harm, suicide, or disordered eating, nor any topics that “encourage, promote, or enable” such behaviors.

The company says teen users will only be allowed to interact with a limited set of AI characters centered on educational, creative, or sports-related content.

These restrictions come as part of Meta’s broader effort to rebuild trust with parents and policymakers following multiple congressional hearings about youth mental health and social media.

Aligning Teen Accounts with PG-13 Standards

Earlier this week, Instagram rolled out an additional update to its “Teen Accounts” settings, aligning the platform’s default visibility rules with PG-13 content guidelines. This means the app will no longer display or promote posts featuring explicit language, violence, or content that promotes harmful behaviors.

Meta says these changes are part of its ongoing initiative to make Instagram a “safe digital space” for younger audiences, while still allowing them to explore creativity and connect with peers responsibly.

Industry-Wide Push for Teen Safety

Instagram’s new parental controls follow a broader industry trend toward AI content moderation and child safety. In late September, OpenAI introduced parental controls for ChatGPT that filter out graphic content, sexual or romantic roleplay, viral challenges, and extreme beauty ideals.

Tech companies have come under intense scrutiny from advocacy groups and legal experts who argue that AI tools can influence minors’ emotions and decision-making in unpredictable ways.

By emphasizing transparency and parental control, Meta hopes to set a new standard for responsible AI deployment in social media, one that balances innovation with child safety.

Expert Opinions

Digital safety experts have praised Meta’s latest initiative but warned that parental monitoring alone won’t solve the problem. “The real challenge lies in AI’s unpredictability,” said Dr. Elaine Parker, a child psychologist who studies technology use among teens. “Even with restrictions, AI systems can respond in unexpected ways depending on how users phrase questions.”

Still, many agree that Instagram’s proactive steps mark a positive shift toward accountability and transparency in the tech industry.