By Harshit

SAN FRANCISCO, November 28, 2025 — 10:45 AM PST

Artificial intelligence has become the foundation of today’s technology—from chatbots and self-driving cars to medical imaging and stock market predictions. But behind all of this AI power lies a tiny piece of hardware: the AI chip. Companies like Nvidia dominate this space, producing the GPUs that train and run the world’s most advanced models.

AI chips may sound complicated, but their core idea is surprisingly simple. This explainer breaks down how these chips work, why they’re different from normal computer processors, and why Silicon Valley cannot stop buying them.

What Makes an AI Chip Different from a Normal CPU?

Most computers run on CPUs (central processing units), the chips responsible for everyday tasks like browsing the web, opening apps, or playing videos. CPUs are built to work through a small number of tasks at a time, each very quickly.

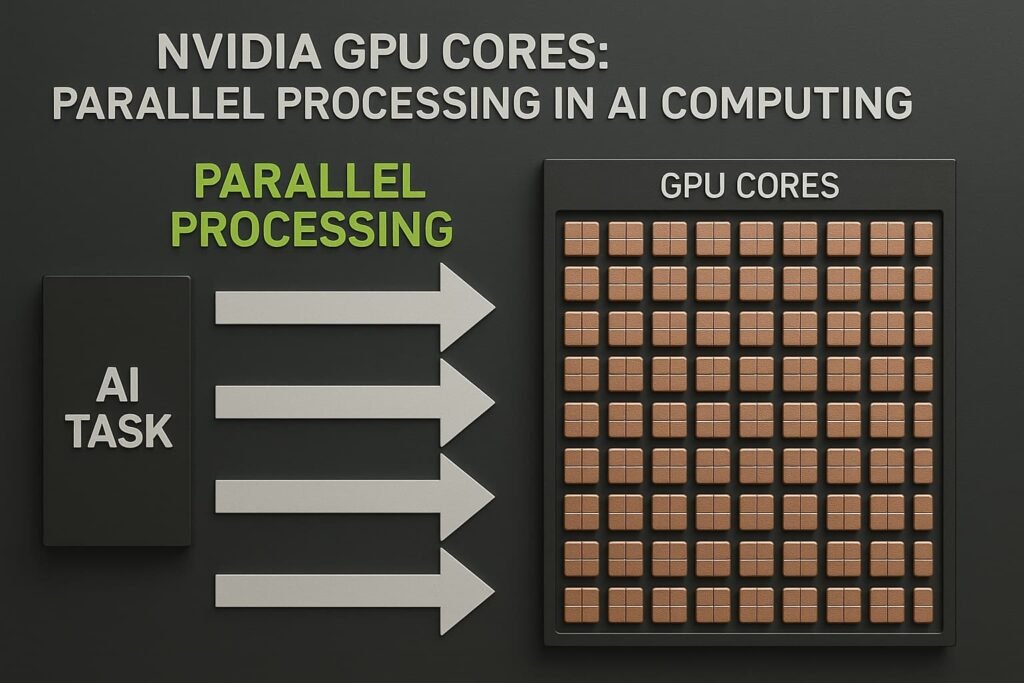

But AI systems need something very different: the ability to do thousands of small calculations at the same moment.

This is where Nvidia’s chips come in.

✔ CPUs = Fast step-by-step processing

✔ GPUs = Massive parallel processing

An AI model, like those used in ChatGPT or image recognition, needs billions of mathematical operations to train and run. A CPU working through them a few at a time could take days or weeks on a job that a GPU finishes in hours, because the GPU splits those operations into thousands of pieces and solves them at once.
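To make the "split it into pieces" idea concrete, here is a minimal Python sketch. It is an illustration only: the function names, the eight workers, and the toy workload are assumptions chosen for this example, and Python threads do not deliver the real speedup a GPU gets from its hardware. What it shows is the structure: independent chunks of work, handled separately, then combined.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """One worker's share: a small, independent piece of the overall job."""
    return sum(x * x for x in chunk)


def split(values, pieces):
    """Cut the input into roughly equal chunks, one per worker."""
    size = (len(values) + pieces - 1) // pieces
    return [values[i:i + size] for i in range(0, len(values), size)]


values = list(range(1_000))

# CPU-style: one worker walks through every value in order.
sequential_total = sum_of_squares(values)

# GPU-style: eight workers each take one chunk, then the results are combined.
chunks = split(values, 8)
with ThreadPoolExecutor(max_workers=8) as pool:
    partial_totals = list(pool.map(sum_of_squares, chunks))
parallel_total = sum(partial_totals)

print(sequential_total == parallel_total)  # both approaches give the same answer
```

The key property is that no chunk depends on any other chunk, which is what lets a GPU hand them to thousands of cores at once.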

The Secret: Parallel Processing

Nvidia’s AI chips, especially the H100, H200 and the newer B-series, are built around the idea of parallelism. Instead of having 8 or 16 cores like a typical CPU, GPUs have thousands of smaller cores, each designed to handle a tiny part of a task simultaneously.

Imagine:

- A CPU is like a single expert writing an entire book alone.

- A GPU is like 5,000 writers each handling one paragraph at the same time.

That’s why GPUs are perfect for:

- Training large language models

- Processing huge image datasets

- Running simulations

- Powering autonomous vehicles

- Medical diagnosis through AI imaging

Tensor Cores: Nvidia’s Superpower

What truly sets Nvidia’s chips apart is something called Tensor Cores.

These are special hardware units designed specifically for AI math, especially matrix multiplication, the core operation inside nearly every neural network.

Neural networks = tons of matrix math

Tensor Cores = built to multiply matrices extremely fast

This is why Nvidia still leads the AI chip market even as competition from AMD, Intel, and custom chips grows. Their GPUs aren’t just powerful—they are built precisely for AI math, making them dramatically faster for modern models.
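For readers who want to see what "matrix math" means in practice, here is a minimal sketch in plain Python. The numbers and the names `inputs` and `weights` are made up for illustration, and this is not how Tensor Cores are programmed; it only shows the operation they are built to accelerate. Each output cell is an independent sum of multiply-and-add steps, which is why the work parallelizes so well.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Every output cell is its own dot product and depends on no other cell.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


# Hypothetical toy values: two input rows passing through a 2x3 weight matrix.
inputs = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [1.5, 2.0, 1.0]]

outputs = matmul(inputs, weights)
multiply_adds = len(inputs) * len(weights) * len(weights[0])

print(outputs)        # [[3.5, 3.0, 2.0], [7.5, 5.0, 4.0]]
print(multiply_adds)  # 12
```

Even this tiny example needs 12 multiply-add steps. The count grows with the product of the three matrix dimensions, so layers with thousands of rows and columns quickly reach billions of steps.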

Training vs. Inference: Two Ways AI Uses Chips

AI chips work in two main stages:

1. Training (Heavy Work)

This is when an AI model learns from enormous datasets.

Examples:

- ChatGPT learning language patterns

- Self-driving cars learning road rules

- Medical models learning to detect cancer

Training requires massive amounts of power, memory, and fast parallel computation—making GPUs essential.

2. Inference (Everyday Use)

This is when the trained AI model responds to inputs.

Examples:

- A chatbot answering your question

- A phone recognizing your face

- Netflix recommending movies

Inference can often run on cheaper, smaller chips, but Nvidia still dominates this part of the market with its L-series and new inference-optimized chips.
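The difference between the two stages can be sketched with a toy model in Python. Everything here is an assumption for illustration: a "model" with a single weight, three made-up data points that follow y = 3x, and hand-picked values for the number of steps and the learning rate. Real models have billions of weights, but the pattern is the same: training loops over the data many times, while inference is one quick calculation.

```python
# Toy dataset (hypothetical): every answer is three times the input.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def predict(weight, x):
    """Inference: a single cheap calculation using the learned weight."""
    return weight * x


def train(data, steps=200, learning_rate=0.05):
    """Training: repeatedly nudge the weight to shrink the prediction error."""
    weight = 0.0
    for _ in range(steps):
        # Average gradient of the squared error across the whole dataset.
        gradient = sum(2 * (predict(weight, x) - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight


weight = train(data)          # heavy work: 200 passes over the data
answer = predict(weight, 4.0)  # everyday use: one multiplication

print(round(weight, 3), round(answer, 3))
```

Training here takes 200 passes over the data to find one number; inference then uses that number in a single step. That gap is why the two stages place such different demands on hardware.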

Why AI Chips Need Huge Memory

AI models hold enormous amounts of data in memory, mainly their learned parameters (weights), which can add up to hundreds of gigabytes.

To keep everything running smoothly, AI chips need:

- High-bandwidth memory (HBM)

- Fast data pathways

- Efficient cooling

This is why Nvidia’s AI chips look nothing like laptop processors. They come with large memory stacks, advanced cooling systems, and power requirements so high that entire data centers must be redesigned around them.
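A rough back-of-the-envelope calculation shows where the "hundreds of gigabytes" comes from. The sketch below assumes a hypothetical model with 70 billion parameters stored as 16-bit numbers (2 bytes each), and a chip with 80 GB of high-bandwidth memory, as on the 80 GB version of the H100. It counts only the weights; real workloads need extra memory for intermediate results, so treat the output as a lower bound.

```python
import math


def weights_gigabytes(parameters, bytes_per_parameter):
    """Memory needed just to hold the model's weights, in gigabytes."""
    return parameters * bytes_per_parameter / 1e9


# Assumptions for illustration only.
parameters = 70_000_000_000   # hypothetical 70-billion-parameter model
bytes_per_parameter = 2       # 16-bit numbers
memory_per_chip_gb = 80       # assumed HBM capacity per chip

weights_gb = weights_gigabytes(parameters, bytes_per_parameter)
chips_needed = math.ceil(weights_gb / memory_per_chip_gb)

print(weights_gb)    # 140.0
print(chips_needed)  # 2
```

Under these assumptions the weights alone do not fit on one chip, which is part of why large models are spread across many GPUs connected by fast data pathways.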

Why AI Chips Are So Expensive

A single Nvidia H100 chip can cost $25,000 to $40,000 depending on configuration. Full clusters used by companies like OpenAI, Google, Amazon, Meta, and Anthropic cost hundreds of millions of dollars.

The main reasons:

✔ Expensive materials and advanced manufacturing

✔ Massive demand from AI companies

✔ Limited supply

✔ Specialized architecture

✔ Huge research and development cost

Some AI startups raise billions of dollars largely to buy enough GPUs.
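The per-chip price range quoted earlier scales quickly to cluster size. The sketch below uses the $25,000 to $40,000 range from this article and a hypothetical cluster of 10,000 GPUs; the cluster size is an assumption for illustration, and the result covers chips only, not networking, buildings, power, or cooling.

```python
PRICE_LOW = 25_000   # low end of the per-chip range quoted in this article
PRICE_HIGH = 40_000  # high end of the same range


def cluster_chip_cost(gpu_count):
    """Return the (low, high) cost in dollars for the GPUs alone."""
    return gpu_count * PRICE_LOW, gpu_count * PRICE_HIGH


# Hypothetical cluster size, chosen only to illustrate the scale.
low, high = cluster_chip_cost(10_000)

print(f"${low:,} to ${high:,}")  # $250,000,000 to $400,000,000
```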

How AI Chips Will Evolve

Analysts expect massive changes over the next five years:

- Custom-built AI chips from OpenAI, Amazon, Google, Meta

- Smaller, cheaper inference chips for home devices

- AI-integrated smartphones and PCs

- Quantum computing acceleration for AI

- New generations of Nvidia GPUs focusing on efficiency

But for now, Nvidia remains the global leader, responsible for powering most of the AI industry.

Why AI Chips Matter to Everyday People

Even though you never see them, AI chips affect your daily life:

- Google search results

- Auto-correct on your phone

- Netflix recommendations

- Weather prediction

- Traffic navigation

- Medical scans

- Fraud detection

As AI grows, these chips will become as essential as electricity.